Global Threat Model Together Day 2025: What we modeled, what we learned

Authors: Ng Yoon Yik, Lilith Pendzich, Takaharu Ogasa, Ron Müller-Knoche, Hendrik Ewerlin, Donavan Cheah, Jamil Ahmed

Overview

On October 23, 2025, we came together for the very first Global Threat Model Together Day, with three international threat modeling sessions covering the entire world! The sessions were:

- 🌏 APAC

- 🌍 EMEA

- 🌎 Americas

They were held a few hours apart with the same content, allowing everyone to participate in their own time zone.

Preparation

We began by asking: How can we best utilize global diversity?

To achieve this, we designed the Playground with a few core principles:

- A shared challenge across all regions

- A simple format accessible even to leaders new to online facilitation

- A structure that allows both participants and organizers to learn and enjoy

- An emphasis on engagement and simplicity

We planned with continuity in mind, identifying elements we could reuse or improve in future sessions.

Despite having only one month to prepare, we exchanged ideas effectively on Slack, debated key points, and practiced collaboration among leaders, sharing our strengths, preferences, and experiences along the way.

Slides:

Global Threat Model Together Day Slides

Session Structure

The format followed the "Threat Modeling Feierabend (after work)" concept from TMC DACH — a method set meets a playground, with threat modeling forming the base.

- 30-minute threat modeling introduction

- 15-minute system model introduction

- 2 × 30-minute threat modeling exercises

Method Set

The 30-minute threat modeling lecture included:

- 4 Question Framework

- Threat, Vulnerability, Risk

- ThreatPad - see also Meet ThreatPad

- STRIDE Security threat modeling

- Privacy threat modeling intro

- Usability threat modeling intro

- Protect, detect, respond, recover from NIST Cyber Security Framework

- Optional Low/Medium/High qualitative Likelihood / Impact assessment

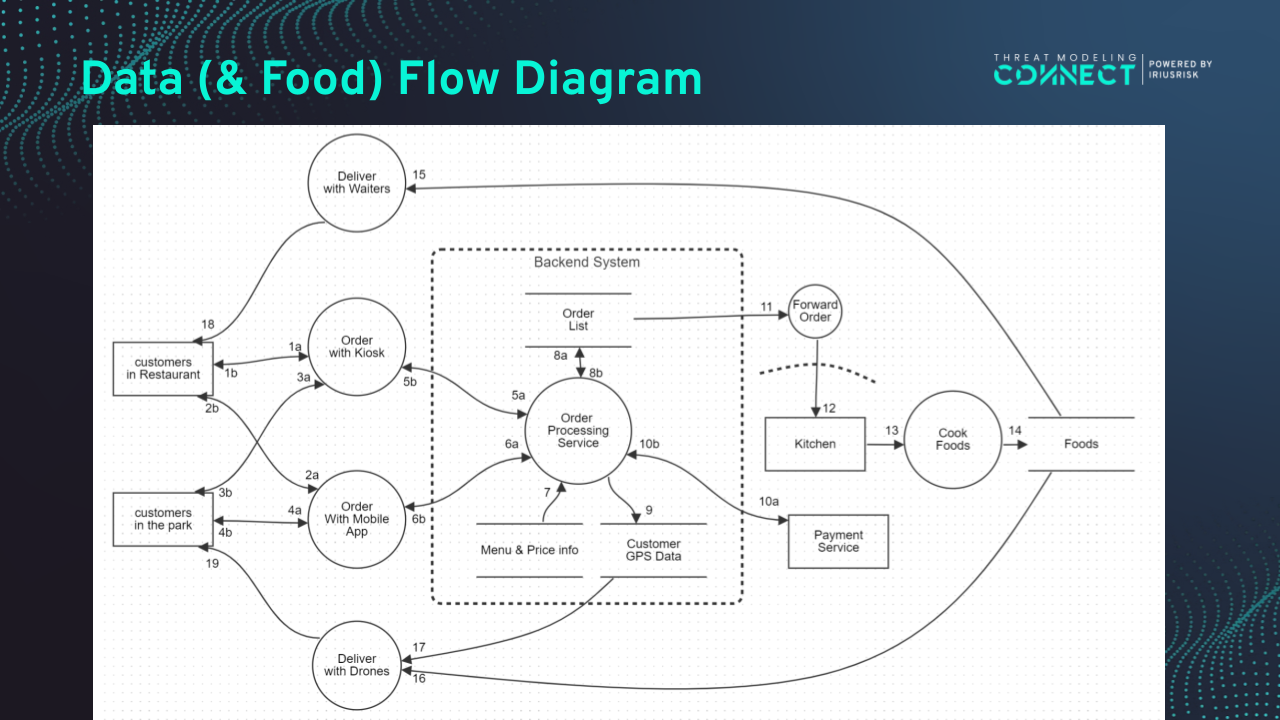

- Provided DFD made with OWASP Threat Dragon
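The optional Low/Medium/High assessment from the method set can be sketched in a few lines of Python. This is only an illustration: the event material did not prescribe how likelihood and impact combine, so the multiplication rule and the bucket boundaries below are assumptions, and `Level` and `risk_rating` are hypothetical names.

```python
from enum import IntEnum


class Level(IntEnum):
    LOW = 1
    MEDIUM = 2
    HIGH = 3


def risk_rating(likelihood: Level, impact: Level) -> Level:
    """Combine likelihood and impact into one qualitative rating.

    Assumed rule (not from the event material): multiply the two scores
    and bucket the product: 1-2 -> LOW, 3-4 -> MEDIUM, 6-9 -> HIGH.
    """
    score = likelihood * impact
    if score <= 2:
        return Level.LOW
    if score <= 4:
        return Level.MEDIUM
    return Level.HIGH


# Example: an unlikely but severe threat lands in the middle bucket.
rating = risk_rating(Level.LOW, Level.HIGH)
```

A team that prefers a more cautious scale could move the boundaries, for example rating anything with HIGH impact as at least MEDIUM, which this sketch already does.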

Playground

The playground was a food delivery system in a theme park, using drones and blending IT, physical, and OT domains.

Event Day

The event was held in a relay format across time zones: APAC, EMEA, and the Americas. About 50% of registered participants joined the live sessions.

APAC setup:

- Zoom breakout rooms (maximum 5 participants per room)

- Facilitator ratio initially planned as 1:3, then changed to 1 facilitator per team due to cancellations, which improved the experience and reduced confusion

Session flow:

- Lecture: 30 minutes

- Hands-on exercise: two 30-minute sprints (Threat Discovery → Mitigation Discussion)

- Debrief: 15 minutes

Between the sprints, a short reflection allowed facilitators to highlight threats discovered by different teams, increasing engagement and perspective-sharing.

Breakout Session Setup

In the breakout sessions, participants saw a screen like this:

On the left side, participants could see ThreatPad. This is where facilitators gathered the threats and mitigations from the participants' contributions. Because ThreatPad is so slim and is also designed for mobile use, there's enough room for a lot of context in a screen share.

On the right side, we had the system model and a method cheat sheet. Also, there were instructions about the task.

Facilitators’ bird’s-eye view:

In the background, facilitators used a tool that showed what was going on in all the sessions at a glance. The overview takes advantage of the fact that ThreatPad can easily be embedded as an iframe. This is how 6 APAC sessions looked in the bird's-eye view as threats and mitigations kept coming in:
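An overview page built on iframe embedding could look roughly like the sketch below. It is not the tool the facilitators used: `overview_page` is a hypothetical helper, and the session URLs are placeholders, since the actual ThreatPad link format is not shown in this write-up.

```python
from html import escape


def overview_page(session_urls: dict[str, str], columns: int = 3) -> str:
    """Return an HTML page that shows one iframe per session in a grid."""
    frames = "\n".join(
        f'  <figure><figcaption>{escape(name)}</figcaption>'
        f'<iframe src="{escape(url)}" title="{escape(name)}"></iframe></figure>'
        for name, url in session_urls.items()
    )
    return f"""<!doctype html>
<html>
<head>
<meta charset="utf-8">
<title>Session overview</title>
<style>
  body {{ display: grid; grid-template-columns: repeat({columns}, 1fr); gap: 8px; margin: 8px; }}
  figure {{ margin: 0; }}
  iframe {{ width: 100%; height: 45vh; border: 1px solid #ccc; }}
</style>
</head>
<body>
{frames}
</body>
</html>
"""


# Placeholder URLs (assumption): replace with the real pad links per team.
sessions = {f"Team {i}": f"https://example.com/pad/team-{i}" for i in range(1, 7)}
html_text = overview_page(sessions)
```

Writing `html_text` to a file and opening it in a browser gives a 3 × 2 grid for six sessions; the `columns` parameter adjusts the layout for more or fewer rooms.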

Results

All threats and mitigations contributed by groups:

What Went Well

- Collaboration among TMC leaders was energizing and educational

- Two-sprint structure with reflection increased engagement and learning depth

- Physical/OT aspects (drones) encouraged thinking beyond technical threats

- ThreatPad’s simplicity facilitated experimentation and accelerated learning

- Overview feature allowed facilitators to observe multiple teams effectively

- Facilitators rotating among teams provided diverse viewpoints and cross-learning

- Strong foundation for future events, enabling faster and smoother organization

Areas for Improvement

- Provide a short ThreatPad tutorial (some participants confused threat vs. mitigation sections)

- Consider trimming the crash course content to better tailor it to each Playground

- Maintain 1 facilitator per team ratio

- Encourage more active engagement in Slack

- Conduct a participant survey (e.g., via Luma) for detailed feedback

Overall Reflection

Our goals — fostering international collaboration and gaining experience co-hosting a global event — were clearly achieved.

Most importantly, this was the first-ever globally co-hosted TMC event, marking a significant milestone.

This experience lays the groundwork for continued collaboration, and we aim to build on this momentum to expand diversity-driven learning and co-creation across the global TMC community.

🌏 APAC Experience & Learnings

General:

- Identifying threats was generally straightforward. Most participants understood the DFD and could easily generate threats. First-timers were slower but caught up with facilitator support

- Breakout rooms worked well, but teams should scope the system before starting

- Providing examples for each threat category could help participants flow into the exercise

- “What’s enough” is subjective. It often came up during debriefs, e.g., “How much mitigation is good enough?”, and there is no right answer to this

- Language is a barrier. If we have non-English-speaking audiences (possible, since nothing stops anyone from joining a TMT Day session outside their geography), we may want slightly bigger groups. (This might manifest differently in EMEA, and may be less of an issue in the Americas)

- Profiling participants by proficiency may help mix and match groups more effectively

ThreatPad:

- Populating example threats can teach tool usage

- Emphasize that no “model answer” exists

- Groups may not use ThreatPad to full capacity; clearer instructions could improve this

DFDs:

- We could tell the groups explicitly that they are allowed to make assumptions. We may not be able to run through every assumption, but we could illustrate with an example.

Facilitators:

- We eventually had about 50% conversion. Five facilitators and one host (total six) allowed for an average of 1 facilitator per group, but even handling this can be a bit difficult! (Some of us with no secondary screen were alternating screens quite furiously throughout)

- It may be nice to identify potential facilitators to help to keep the facilitator:participant ratio good

🌍 EMEA Experience & Learnings

EMEA capped registrations at 80 but saw a surprisingly low turnout: 19 participants attended. We had five facilitators and spontaneously decided to split into two groups, so two facilitators shared each group and one facilitator got to hop between the two sessions.

It was really enlightening to see how the different sessions unfolded! Before us, APAC had split into 6 sessions, and I got to observe those in the bird's-eye view as threats and mitigations kept coming in. What's kind of interesting is that they mostly seemed to follow a threat-mitigation-threat-mitigation (⛈️☂️⛈️☂️) pattern.

EMEA, on the other hand, spent 30 minutes on threats-threats-threats, then 30 minutes on mitigations-mitigations-mitigations (⛈️⛈️☂️☂️). EMEA 1 followed a more structured approach: they iterated per element and also used ThreatPad tags to structure their findings. EMEA 2 brainstormed threats instead. Their threat elicitation was extremely lively, with new threats discovered and recorded every few seconds.
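Iterating per element, as EMEA 1 did, can be pictured as walking through every pairing of a system element with a STRIDE category. The sketch below is a simplified illustration: it applies all six categories to every element, the element names are invented rather than taken from the provided DFD, and `per_element_prompts` is a hypothetical helper.

```python
from itertools import product

# The six STRIDE threat categories.
STRIDE = (
    "Spoofing",
    "Tampering",
    "Repudiation",
    "Information disclosure",
    "Denial of service",
    "Elevation of privilege",
)


def per_element_prompts(elements: list[str]) -> list[str]:
    """Return one brainstorming prompt per (element, STRIDE category) pair."""
    return [
        f"{element}: how could {category.lower()} happen here?"
        for element, category in product(elements, STRIDE)
    ]


# Invented element names (assumption), not the actual DFD elements.
prompts = per_element_prompts(["Drone", "Ordering app"])
```

Two elements yield twelve prompts, which shows why the structured approach is slower but more systematic than free brainstorming.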

In the mitigation phase, EMEA 2, who had previously found lots of threats by brainstorming, chose to do the optional likelihood / impact assessment. Their previously very fast pace slowed down. They also found follow-up threats and added even more of them, so their end result is dominated by a lot of threats with no mitigations. While their threats are really impressive, the overall impression leans toward admiration for the problem. EMEA 1, on the other hand, achieved a good threat-mitigation ratio. They also decided to tag their mitigations with prevent/detect/respond, which provided an additional overview.

When we contrast this with the APAC threat-mitigation style, we see fewer unmitigated threats there by design, because those groups mitigated each threat right away. On the other hand, focusing on threat discovery first seems to promote flow.
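One way to make the difference between the two styles measurable is to compute the share of threats that have at least one mitigation. The following sketch assumes a simple in-memory structure; `Threat`, `mitigation_coverage`, and `unmitigated` are hypothetical names, and the sample entries are invented for illustration, not taken from the session results.

```python
from dataclasses import dataclass, field


@dataclass
class Threat:
    title: str
    mitigations: list[str] = field(default_factory=list)


def mitigation_coverage(threats: list[Threat]) -> float:
    """Share of threats with at least one recorded mitigation (0.0 if empty)."""
    if not threats:
        return 0.0
    mitigated = sum(1 for t in threats if t.mitigations)
    return mitigated / len(threats)


def unmitigated(threats: list[Threat]) -> list[str]:
    """Titles of threats that still have no mitigation."""
    return [t.title for t in threats if not t.mitigations]


# Invented sample data (assumption), only to show the calculation.
sample = [
    Threat("Drone GPS signal is spoofed", ["Cross-check position with geofence"]),
    Threat("Order is delivered to the wrong guest"),
    Threat("Ordering app is flooded with fake orders", ["Rate limiting"]),
    Threat("Drone battery fails mid-flight"),
]
coverage = mitigation_coverage(sample)
open_items = unmitigated(sample)
```

A low coverage value after the second sprint would be a hint that a group is drifting toward admiring the problem.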

Wrapping up, we have interesting meta-learnings about how several factors influence results and may, as one effect, end in admiration for the problem or not: group size, threat-mitigation-threat-mitigation (⛈️☂️⛈️☂️) versus threat-threat-mitigation-mitigation (⛈️⛈️☂️☂️) style, risk assessment, and detailed write-ups versus quick jotting.

We'd like to thank all our participants for the fun experience! You are awesome!

We should provide collaborative massively multiplayer intercultural online threat modeling with different approaches as a service! 😉

🌎 Americas Experience & Learnings

General:

- We had 30+ participants in 7 breakout rooms. Most participants coordinated easily in the breakout rooms. A few participants had connectivity issues, so they logged back into the main Zoom meeting, and we then placed them in their breakout room again.

- In the training/introduction part, I (Jamil Ahmed) shared with the participants that they can list some basic functionality-related threats as well as some more technical security threats. Most participants were able to easily identify the basic functionality threats.

- I (Jamil Ahmed) kept switching between breakout rooms and spent more time with participants in the rooms where we did not have facilitators.

- We had 3 facilitators in total but 7 breakout rooms. I encouraged participants to pick a leader for each breakout room, especially for the rooms where we did not have a facilitator.

- Some participants had trouble navigating ThreatPad at first, but they quickly learned how to use it. One team logged into the wrong ThreatPad canvas: instead of America-Team2, they logged in to APAC-Team-2. We corrected it for them.

- After the time was up, we brought all participants back into the main Zoom meeting room and a few participants discussed the threats that they had identified.

Takeaway:

- We could provide a sample threat model with technical security threats, followed by a challenge. While solving the challenge, participants could then refer to the sample threat model to find more technical threats.